In an era that calls for data-driven decision-making, Bayesian analysis is a powerful statistical tool for handling complexity and uncertainty. Regarded by many as one of the foundations of modern statistics, it interprets probability as a degree of belief, or credibility, that a person holds about a particular hypothesis. Academic scholars and practitioners alike have found Bayesian methods invaluable across a range of disciplines, from machine learning to medicine.

Bayesian principles offer a systematic way to integrate prior knowledge with new evidence, a feature particularly appealing in research where prior information is abundant. In this comprehensive guide, we will unravel the layers of Bayesian analysis and its implications for contemporary research, so that readers come away with a strong foundation for approaching problems through a statistical lens.

Introduction to Bayesian Analysis

Definition of Bayesian Analysis

Bayesian Analysis is an approach to statistical inference that melds prior information about a parameter with new data to produce a posterior distribution, which is essentially an updated belief about the parameter's value. Bayes' theorem, the cornerstone of Bayesian methodology, quantifies how one's belief should rationally change to account for evidence. In essence, Bayesian analysis offers a formal mathematical method for combining prior knowledge with current information to form a composite view of a studied phenomenon.

Brief history and development of Bayesian Analysis

The history of Bayesian Analysis dates back to Reverend Thomas Bayes, from whom the theory gets its name. Bayes' work laid the groundwork in the 18th century, but it was not until the late 20th century that Bayesian methods burgeoned, mainly thanks to advances in computational technology. This evolution has allowed researchers to tackle complex and high-dimensional problems that were previously considered intractable. Bayesian Analysis has now become a staple in statistical education, often highlighted in online certificate courses for its broad applicability and principled approach to uncertainty.

Fundamental principles behind Bayesian Analysis

The fundamental principles behind Bayesian Analysis rest on the idea that all types of uncertainty can be represented using probability. A Bayesian framework treats unknown parameters as random variables with associated probability distributions rather than as fixed but unknown quantities. This probabilistic approach allows for a flexible yet rigorous way to summarize information. The inference process also mirrors an intuitive habit of reasoning, in which we revise our views as new evidence is presented.

The significance of Bayesian Analysis in the field of Statistics

In the field of statistics, Bayesian Analysis has cemented its significance through its philosophical stance on probability. In contrast to classical frequentist statistics, which interprets probability strictly as long-run frequency, Bayesian probability expresses a degree of belief, which is updated coherently as new data arrive. This allows for a more nuanced and richer statistical analysis in which both uncertainty and variability are captured. This significance is further heightened by the flexibility of Bayesian methods in model formulation, as they are particularly adept at dealing with complex models that would be cumbersome under frequentist approaches.

The Mathematics of Bayesian Analysis

Overview of Probability Theory

The mathematical underpinning of Bayesian Analysis is inherently tied to concepts of probability theory. Fundamental to this is the probability space that sets the stage for any random process. It consists of a sample space (the set of all possible outcomes), a collection of events (subsets of the sample space), and a probability measure that assigns a probability to each event. Probability theory provides the rules and tools to work through complex stochastic scenarios, laying the groundwork for any statistical modeling, including Bayesian.

Introduction to Bayes' Theorem

Bayes' theorem is the mathematical engine of Bayesian analysis, elegantly linking the prior and the likelihood of observed data to the posterior distribution. In its simplest form, Bayes' theorem relates these elements in a proportional fashion, stating that the posterior is proportional to the likelihood of the evidence multiplied by the prior belief. This allows statisticians to start with a hypothesized distribution, grounded in prior knowledge, and revise it in light of new, incoming data.
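Written out for a parameter and a data set, the proportional form described above looks as follows. The symbols are notation introduced here for illustration: \theta stands for the unknown parameter, y for the observed data, p(\theta) for the prior, p(y \mid \theta) for the likelihood, and p(y) for the normalizing constant (the marginal probability of the data).

```latex
p(\theta \mid y)
  = \frac{p(y \mid \theta)\, p(\theta)}{p(y)}
  \;\propto\; p(y \mid \theta)\, p(\theta),
\qquad
p(y) = \int p(y \mid \theta)\, p(\theta)\, d\theta
```

Because p(y) does not depend on \theta, it only rescales the product of likelihood and prior so that the posterior integrates to one.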

Integrating Prior Beliefs with Probabilistic Evidence

In Bayesian Analysis, integrating prior beliefs with probabilistic evidence is a process that is both intuitive and mathematically rigorous. By using probability distributions to quantify both prior knowledge and the likelihood of observed data, researchers can construct a mathematically sound and cohesive framework for updating beliefs. The transformation of prior to posterior encapsulates the essence of learning from evidence, a cornerstone in the methodology.

Common misconceptions about the mathematical aspects of Bayesian Analysis

Despite its strong theoretical foundation, misconceptions about the mathematics of Bayesian Analysis are common. Some view the use of prior knowledge as too subjective, leading to biased outcomes. In practice, however, objective and subjective priors can coexist within a Bayesian framework, with the objectivity of the analysis maintained through principled model checking and validation. Furthermore, the intricacies of probability distributions might seem daunting; nonetheless, with adequate training and the support of modern computational tools, these can be navigated with finesse.

Understanding Priors

Explanation of Priors in Bayesian Analysis

Priors in Bayesian Analysis represent the quantification of prior knowledge or beliefs about a parameter before considering the current data. This prior distribution is what sets Bayesian methods apart from other statistical techniques, allowing the incorporation of expert opinion or results from previous studies. Priors can take various forms, ranging from non-informative, designed to minimally influence the analysis, to highly informative, which assert strong beliefs about parameter values.

The role of Priors in the decision-making process

The role of priors in the decision-making process is critical as they directly influence the posterior distribution and consequently, the decisions drawn from the analysis. In scenarios where data is scarce or noisy, the prior can provide a stabilizing effect, guiding the inference towards plausible values. Conversely, with abundant data, the influence of the prior diminishes and the data drive the results, illustrating the dynamic balance between belief and evidence in Bayesian Analysis.
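The balance between prior and data can be illustrated with a small sketch. It assumes a Beta prior on a success probability combined with binomial data, a conjugate pair chosen here only because the update has a closed form; the prior parameters and the data sets are hypothetical.

```python
def beta_posterior(alpha, beta, successes, failures):
    """Conjugate update: Beta(alpha, beta) prior plus binomial data."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical priors: one informative (centred on 0.5), one flat.
priors = {"informative": (20, 20), "flat": (1, 1)}

# Hypothetical data sets with the same 70% success rate but different sizes.
datasets = {"small": (7, 3), "large": (700, 300)}

posterior_means = {}
for data_name, (successes, failures) in datasets.items():
    for prior_name, (alpha, beta) in priors.items():
        a_post, b_post = beta_posterior(alpha, beta, successes, failures)
        posterior_means[(data_name, prior_name)] = beta_mean(a_post, b_post)

# How far apart do the two priors leave the posterior means?
gap_small = abs(posterior_means[("small", "informative")]
                - posterior_means[("small", "flat")])
gap_large = abs(posterior_means[("large", "informative")]
                - posterior_means[("large", "flat")])

print(f"gap between priors with 10 observations:   {gap_small:.3f}")
print(f"gap between priors with 1000 observations: {gap_large:.3f}")
```

With ten observations the two priors lead to noticeably different posterior means; with a thousand observations the difference nearly vanishes, which is the "data drive the results" regime described above.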

Discussion on objective and subjective priors

Objective priors are designed to have minimal influence on the posterior distribution, thus affording the data maximum sway in the analysis. These are often uniform or reference priors, intended to reflect a state of ignorance about the parameters. Subjective priors, on the other hand, encode specific beliefs or knowledge about the parameters and are useful when prior information is robust and relevant. The choice between objective and subjective priors must be made based on context, and it is important to transparently report and justify the priors used in any Bayesian study.

Understanding Likelihood

Explanation of Likelihood in Bayesian Analysis

The likelihood is a function that describes how probable the observed data are under each candidate value of the parameters; the data are held fixed and the parameters vary. In Bayesian Analysis, it is used in conjunction with the prior distribution to update our belief about the parameters after seeing the data. The likelihood principle asserts that all the information in the data about the parameters is contained in the likelihood function.

The consideration of data evidence in underlying models

The careful consideration of data evidence is paramount to the integrity of underlying models in Bayesian Analysis. Likelihood functions need to be properly specified to ensure they adequately represent the process generating the data. If the likelihood is misspecified, this may lead to incorrect posterior distributions and misleading conclusions. This underscores the importance of having a thorough understanding of the probabilistic mechanisms at play in any given situation.

The role of Likelihood in calculating posterior probabilities

The role of the likelihood is pivotal in calculating posterior probabilities, as it serves as the weighting mechanism that updates the prior distribution. By evaluating the likelihood at different parameter values, we can gauge how well each parameter value explains the observed data. This weighting is what informs the shape of the posterior distribution, which, in a Bayesian context, provides the complete picture of our updated knowledge about the parameter of interest.
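Evaluating the likelihood at different parameter values can be made concrete with a grid approximation. The sketch below is illustrative only: it assumes a binomial model, a flat prior, and hypothetical data (6 successes in 9 trials), none of which come from the text above.

```python
from math import comb


def binomial_likelihood(theta, successes, trials):
    """Probability of the observed count for a given success probability."""
    return (comb(trials, successes)
            * theta ** successes
            * (1 - theta) ** (trials - successes))


# Candidate parameter values on a regular grid from 0 to 1.
grid = [i / 100 for i in range(101)]

# Flat prior: every candidate value is equally plausible beforehand.
prior = [1.0 for _ in grid]

# Hypothetical data.
successes, trials = 6, 9

# Weight each candidate by how well it explains the data, then normalize.
weights = [binomial_likelihood(theta, successes, trials) * p
           for theta, p in zip(grid, prior)]
total = sum(weights)
posterior = [w / total for w in weights]

# The grid point with the highest posterior probability.
best_theta = grid[posterior.index(max(posterior))]
print(f"most plausible grid value: {best_theta}")
```

With a flat prior the shape of the posterior is determined entirely by the likelihood, so the most plausible grid value sits next to the observed proportion 6/9.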

Understanding Posterior Distribution

Explanation of Posterior Distribution in Bayesian Analysis

The posterior distribution is the result of blending the prior distribution with the likelihood of the observed data within the Bayesian framework. This distribution embodies our updated belief about the parameter values after taking into account both the prior knowledge and the new evidence from the data. It serves as a comprehensive summary of what we know about the parameters, given everything at our disposal.

The transformation of prior beliefs with the help of evidence

The transformation of prior beliefs with the help of evidence is a testament to the dynamic nature of Bayesian Analysis. As new data becomes available, the posterior distribution evolves, reflecting an enhanced understanding that harmonizes both the extant knowledge and the recent evidence. This process represents an iterative learning cycle, central to adaptive decision-making and predictive modeling.

The process and significance of calculating posterior probabilities

Calculating posterior probabilities is not just a mathematical exercise; it bears significant implications for inferential statistics and predictive analysis. The posterior probabilities provide the full palette of possible parameter values, weighted by their plausibility in light of the data and prior beliefs. This forms the backbone of Bayesian decision-making, where actions are informed by a probabilistic assessment of all possible outcomes.
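As a rough illustration of how a posterior feeds into a decision, the sketch below summarizes a posterior by its mean, a 95% credible interval, and the probability that the parameter exceeds a threshold. The Beta(8, 4) posterior and the threshold of 0.5 are assumptions made for this example; the summaries are Monte Carlo estimates from random draws.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Assumed posterior: Beta(8, 4), e.g. a flat Beta(1, 1) prior updated
# with 7 successes and 3 failures (hypothetical data).
alpha_post, beta_post = 8, 4
draws = sorted(rng.betavariate(alpha_post, beta_post) for _ in range(20000))

# Point summary of the posterior.
posterior_mean = sum(draws) / len(draws)

# Equal-tailed 95% credible interval from the empirical quantiles.
lower = draws[int(0.025 * len(draws))]
upper = draws[int(0.975 * len(draws))]

# Posterior probability that the parameter exceeds 0.5.
prob_above_half = sum(d > 0.5 for d in draws) / len(draws)

print(f"posterior mean:        {posterior_mean:.3f}")
print(f"95% credible interval: ({lower:.3f}, {upper:.3f})")
print(f"P(theta > 0.5 | data): {prob_above_half:.3f}")
```

A decision rule could then be stated directly in terms of these quantities, for instance acting only if the posterior probability of exceeding the threshold is high enough.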

Application of Bayesian Analysis in Real World

Brief overview of different fields where Bayesian Analysis is applied

Bayesian Analysis finds utility in a wide array of fields, evidencing its versatility and adaptability. Its methods are employed in fields ranging from genetics, where it helps in mapping genes, to environmental science, where it aids in the modeling of climate change. Economists use Bayesian Analysis to forecast market trends, while in the tech industry, it contributes to the development of robust artificial intelligence (AI) systems. Its widespread application is a testament to the methodology's ability to manage uncertainty and incorporate external knowledge into analytical models.

Real-life examples illustrating Bayesian Analysis

A practical realization of Bayesian Analysis can be seen in how search engines interpret user queries. They can employ Bayesian inference to update the relevance of search results based on user interactions, thus personalizing the search experience. In another example, during the outbreak of an epidemic, public health officials use Bayesian models to predict disease spread, taking into account prior outbreaks and current data to inform policy decisions. These real-life examples underscore the utility of Bayesian methods in tackling complex problems with layers of uncertainty.

Current trends and advancements in the application of Bayesian Analysis

The current trends and advancements in the application of Bayesian Analysis are largely defined by the increasing computational power and the development of sophisticated simulation techniques, like Markov Chain Monte Carlo (MCMC) methods. These advancements have unlocked the potential for Bayesian Analysis to handle larger datasets and more complex models. Moreover, the advent of accessible software and platforms has democratized Bayesian methods, extending their reach across various disciplines.

Bayesian Analysis in Machine Learning and Artificial Intelligence

Explanation and significance of Bayesian methods in Machine Learning and AI

Bayesian methods have become increasingly significant in the realm of Machine Learning (ML) and AI, defining a subset colloquially known as Bayesian Machine Learning. These methods are prized for their ability to quantify uncertainty and for their principled way of incorporating prior knowledge into predictive models. Bayesian methods bring a level of rigor to AI that facilitates robust and interpretable decisions, characteristics that are vital as machine learning systems become more pervasive in critical domains.

Examples of machine learning algorithms that utilize Bayesian analysis

Some examples of machine learning algorithms that effectively utilize Bayesian Analysis include Bayesian Networks for capturing probabilistic relationships between variables, and Gaussian processes for non-parametric regression. Bayesian optimization is also an influential tool used for tuning hyperparameters in complex machine learning models. These are among a repertoire of Bayesian tools that are shaping contemporary ML and AI.

Bayesian Analysis in Medical and Health Sector

Discussion of how Bayesian Analysis is used to analyze clinical trials and medical data

In the medical and health sector, Bayesian Analysis plays a crucial role in the analysis of clinical trials and medical data. It provides a framework where prior clinical knowledge can be systematically incorporated, potentially leading to more efficient trial designs and more precise estimation of treatment effects. Additionally, Bayesian approaches to predictive modeling can account for patient heterogeneity, tailoring medical predictions and decisions to individual patient profiles.

Example cases depicting use of Bayesian techniques in important medical decisions

Bayesian techniques have been instrumental in many important medical decisions, such as determining the optimal dosage of a new drug or assessing the risk of disease recurrence in a patient. By incorporating the uncertainty in these decisions and allowing the use of prior information, doctors and medical researchers can make more informed and tailored healthcare decisions. This has profound implications for personalized medicine, a field that is rapidly growing in light of advancements in genomics and biotechnology.

Strengths and Limitations of Bayesian Analysis

Detailed discussion of pros and cons of using Bayesian methods

Bayesian Analysis brings a host of strengths, including the ability to incorporate prior knowledge, quantify uncertainty, and continually update conclusions as new data are observed. However, it is not without its limitations. The subjective nature of choosing priors can lead to controversy, even though objective methods for choosing priors continue to be developed. Additionally, Bayesian computations can be intensive, and interpreting the results may require a deeper understanding of statistical theory than some frequentist methods do.

Direct and indirect comparisons with other statistical methods — such as frequentist statistics

Directly comparing Bayesian methods with frequentist approaches can illuminate their distinct philosophical underpinnings. Bayesian methods treat probability as a degree of belief and consider parameters as random variables. In contrast, frequentist methods treat parameters as fixed, reserve probability for repeatable random processes, and interpret it as long-run frequency. While Bayesian Analysis offers a dynamic and coherent approach to inference, the frequentist paradigm remains popular due to its long history and the widespread familiarity of confidence intervals and hypothesis tests.

Conclusion

Recap of major points discussed throughout the blog

This comprehensive guide has traversed the core principles and mathematical underpinnings of Bayesian Analysis, emphasizing its significance in the broader field of statistics. We have explored how Bayesian methods integrate prior beliefs with new evidence, addressed common misconceptions, and surveyed applications across various industries. Real-world examples have illustrated the tangible impact of Bayesian methods, and their growing importance in AI and personalized medicine has been highlighted. Moreover, the discussion of the strengths and limitations of Bayesian Analysis offered a balanced viewpoint.

Forecast of future developments in the field of Bayesian Analysis

The future developments in Bayesian Analysis are expected to be influenced by the continued rise of computational capabilities and further integration of Bayesian methods into diverse fields. The burgeoning data environments will amplify the need for statistical models that can handle high-dimensionality and complex dependencies among variables, an arena where Bayesian Analysis naturally excels.

Final remarks on the importance of understanding and implementing Bayesian Analysis

In conclusion, understanding and implementing Bayesian Analysis is paramount in this data-centric world. Whether through formal education or via online certificate courses, enriching one's statistical toolkit with Bayesian methods will be invaluable in navigating today’s complex data landscapes. By embracing these techniques, researchers and practitioners can look forward to a richer interpretation of data and more informed decision-making across the spectrum of scientific inquiry.

Frequently Asked Questions

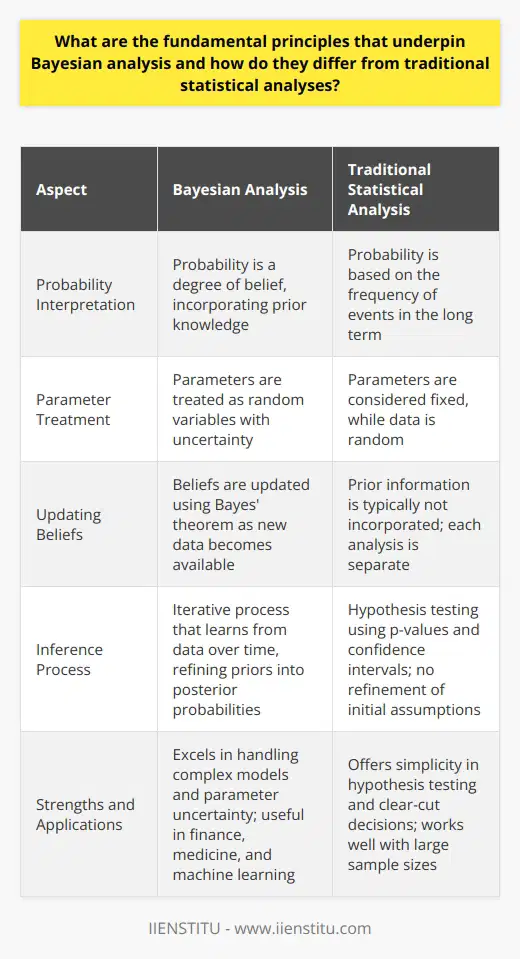

What are the fundamental principles that underpin Bayesian analysis and how do they differ from traditional statistical analyses?

Bayesian Fundamentals

Bayesian analysis stands on distinct principles. It contrasts with frequentist statistics. Both hold different views on probability. The Bayesian approach is probabilistic. It treats probability as a degree of belief. In this belief, prior knowledge matters. We express this knowledge with prior probabilities.

Traditional analyses differ. These rely on frequency of events. They consider long-term behavior. Probabilities are strictly about sample frequencies. Prior information is not typically incorporated. In frequentist paradigms, parameters are fixed. Data is random.

Bayesian methods incorporate new data smoothly. They update beliefs through Bayes' theorem. This theorem is a mathematical formula. It revises probabilities with new evidence. The formula is P(H|E) = (P(E|H) * P(H)) / P(E). Each component of this formula is crucial.

Bayes’ Theorem Components

- P(H|E): Probability of hypothesis given evidence.

- P(E|H): Probability of evidence under hypothesis.

- P(H): Prior probability of hypothesis.

- P(E): Probability of evidence, averaged over all hypotheses.
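A worked example may help tie the four components together. The numbers below are hypothetical and describe a diagnostic test: H is "the patient has the condition" and E is "the test is positive". P(E) is obtained by summing over both possibilities for H.

```python
# Hypothetical inputs (assumptions for illustration only).
p_h = 0.01              # P(H): prior probability of the condition
p_e_given_h = 0.95      # P(E|H): probability of a positive test if H is true
p_e_given_not_h = 0.05  # P(E|not H): false-positive rate

# P(E): total probability of a positive test across both cases.
p_e = p_e_given_h * p_h + p_e_given_not_h * (1 - p_h)

# Bayes' theorem: P(H|E) = P(E|H) * P(H) / P(E).
p_h_given_e = p_e_given_h * p_h / p_e

print(f"P(E)   = {p_e:.4f}")
print(f"P(H|E) = {p_h_given_e:.4f}")
```

Even with an accurate test, the posterior probability stays well below one half here because the prior probability of the condition is so low.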

Differences in Analysis

Bayesian analysis sees parameters as uncertain. They are random quantities. Priors and likelihoods are essential. Priors capture existing beliefs before data. Likelihoods give the probability of the observed data under each parameter value. The resulting beliefs are flexible. They adapt as new data come in.

Frequentist analysis sets parameters as fixed. It does not use priors. The data is the sole source of information. Tests like null hypothesis significance testing are common. P-values are used to reject or fail to reject hypotheses. Confidence intervals give range estimations. These are for a given level of confidence.

Bayesian Inference

Bayesian inference is iterative. It learns from data over time. It refines priors into posterior probabilities. The posterior reflects updated beliefs. It does so after considering the new data. This process accumulates knowledge. Each new batch of data improves understanding. Under suitable conditions, and with enough data, the posterior concentrates around the true parameter values.

Contrast this with classical methods. These do not formally update initial beliefs. Results from different experiments are typically analyzed separately. They do not automatically build upon each other. Each test starts from a fresh slate. It lacks the built-in continuity of Bayesian updating.
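The iterative updating described above can be sketched with a simple case. The example assumes a Beta(1, 1) prior on a success probability and a hypothetical stream of success/failure observations; each posterior becomes the prior for the next observation.

```python
def update(alpha, beta, observation):
    """One Bayesian update of a Beta(alpha, beta) belief with a 0/1 outcome."""
    if observation:
        return alpha + 1, beta
    return alpha, beta + 1


# Hypothetical observations arriving one at a time (1 = success).
observations = [1, 0, 1, 1, 0, 1, 1, 1]

# Start from a flat Beta(1, 1) prior.
alpha, beta = 1, 1
history = []
for obs in observations:
    # Yesterday's posterior is today's prior.
    alpha, beta = update(alpha, beta, obs)
    history.append(alpha / (alpha + beta))  # running posterior mean

# Updating once with all the data gives the same posterior.
batch = (1 + sum(observations),
         1 + len(observations) - sum(observations))

print("sequential posterior:", (alpha, beta))
print("batch posterior:     ", batch)
```

The sequential and batch results coincide, which is why knowledge can be accumulated across studies without revisiting the earlier data.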

Practical Applications

Bayesian methods excel in complex models. They handle parameter uncertainty well. This is useful in many fields. Finance, medicine, and machine learning are examples. They provide a coherent framework. They quantify uncertainty in predictions.

Frequentist methods remain popular. They offer simplicity in hypothesis testing. They are good for clear-cut decisions. They work well when large sample sizes are available. Bayesian methods can become computationally intense. This is especially true for complex models with many parameters.

In conclusion, Bayesian principles emphasize learning from data. They merge old knowledge with new evidence. Traditional statistics focus on data analysis with set parameters. Both have their places. Their best use depends on context. Understanding both is crucial for statisticians and data scientists.

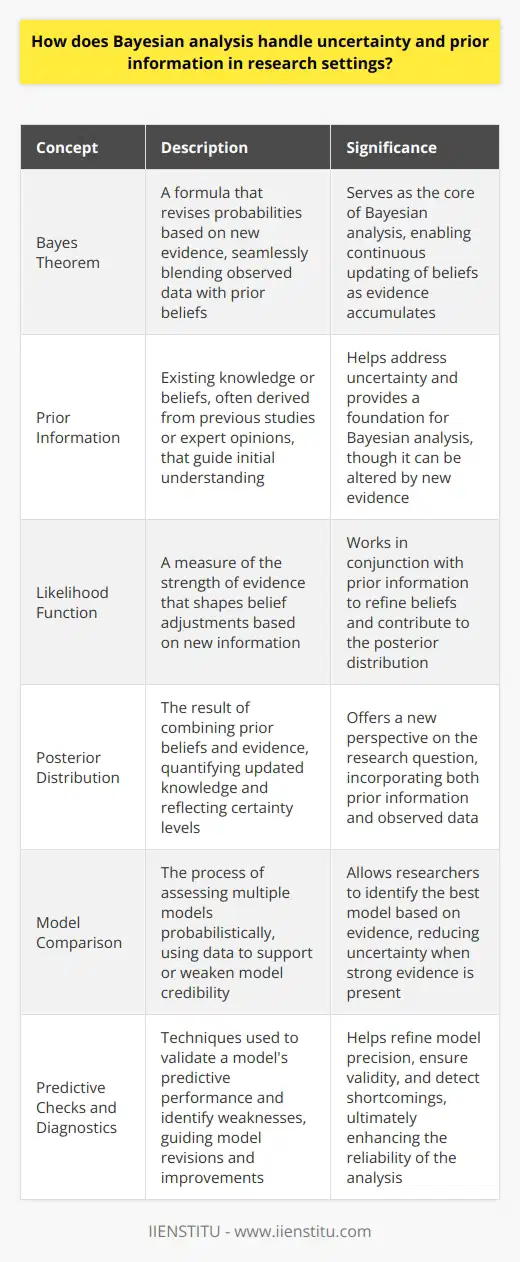

How does Bayesian analysis handle uncertainty and prior information in research settings?

Understanding Bayesian Analysis

Bayesian analysis wields a distinctive approach. It manages uncertainty using probabilities. Researchers appreciate its ability to incorporate prior knowledge. Prior information forms the foundation of Bayesian statistics.

Bayes' Theorem: The Core

At its core lies Bayes' Theorem. This formula revises probabilities with new evidence. It seamlessly blends observed data with prior beliefs. Bayes' Theorem grounds itself in updating. As evidence accumulates, beliefs become more informed.

Incorporating Prior Information

Researchers start with prior probabilities. These embody existing knowledge or beliefs. Prior information may come from previous studies. It can also arise from expert opinions. The prior guides initial understanding.

- Prior helps address uncertainty.

- It is not set in stone.

- Evidence alters the prior belief.

Embracing New Evidence

As data emerge, researchers adjust their beliefs. This involves the likelihood function. The likelihood measures how well each parameter value explains the data. It shapes belief adjustments from the new information. Bayesian analysis thrives on continuous updating.

- Analysis refines beliefs with data.

- Likelihood and prior work together.

- Posterior distribution becomes the new focus.

The Posterior Distribution: A New Perspective

The result is the posterior distribution. It combines prior beliefs and evidence. The posterior quantifies updated knowledge. It offers a probability distribution reflecting certainty levels.

Handling Model Uncertainty

Bayesian methods can compare multiple models. Each model has its own probability. Data either supports or weakens model credibility. Model comparison follows naturally, for example through Bayes factors or posterior model probabilities.

- Researchers assess models probabilistically.

- Data informs the best model choice.

- Uncertainty lessens with strong evidence.
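A minimal sketch of probabilistic model comparison follows. It assumes two hypothetical models for a coin: one fixes the probability of heads at 0.5, the other places a Uniform(0, 1) prior on it. The ratio of the two marginal likelihoods is commonly called a Bayes factor; the data (9 heads in 10 tosses) and the equal prior model probabilities are made up for illustration.

```python
from math import comb, factorial


def marginal_fixed(successes, trials, theta=0.5):
    """Marginal likelihood when theta is fixed (no free parameter)."""
    return (comb(trials, successes)
            * theta ** successes
            * (1 - theta) ** (trials - successes))


def marginal_uniform(successes, trials):
    """Marginal likelihood with a Uniform(0, 1) prior on theta.

    Integrating the binomial likelihood over theta gives
    C(n, k) * k! * (n - k)! / (n + 1)!, which simplifies to 1 / (n + 1).
    """
    k, n = successes, trials
    return comb(n, k) * factorial(k) * factorial(n - k) / factorial(n + 1)


# Hypothetical data.
successes, trials = 9, 10

m_fair = marginal_fixed(successes, trials)
m_free = marginal_uniform(successes, trials)

# Bayes factor in favour of the free-parameter model.
bayes_factor = m_free / m_fair

# Posterior model probabilities, assuming equal prior probability per model.
post_free = m_free / (m_free + m_fair)
post_fair = 1 - post_free

print(f"Bayes factor (free vs fair): {bayes_factor:.2f}")
print(f"P(free model | data): {post_free:.3f}")
```

The data shift credibility towards the model that allows a biased coin, yet the fair-coin model keeps some posterior probability, which is how model uncertainty is carried forward rather than discarded.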

Predictive Checks and Diagnostics

Bayesian analysis uses posterior predictive checks. These compare data simulated from the fitted model with the observed data. Diagnostics help identify model weaknesses. They guide model revisions and improvements.

- Predictive checks affirm model validity.

- Diagnostics detect shortcomings.

- The process refines model precision.
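One common form of predictive check simulates replicated data sets from the fitted model and asks whether they resemble the observed data. The sketch below assumes a model of independent Bernoulli trials with a Beta(1, 1) prior, and uses the longest run of identical outcomes as the test statistic; the observed sequence and the choice of statistic are hypothetical.

```python
import random


def longest_run(sequence):
    """Length of the longest run of identical consecutive values."""
    best = current = 1
    for previous, value in zip(sequence, sequence[1:]):
        current = current + 1 if value == previous else 1
        best = max(best, current)
    return best


rng = random.Random(1)  # fixed seed for reproducibility

# Hypothetical observed data: suspiciously streaky for independent trials.
observed = [1] * 10 + [0] * 10
observed_stat = longest_run(observed)

# Posterior for the success probability under a Beta(1, 1) prior.
alpha_post = 1 + sum(observed)
beta_post = 1 + len(observed) - sum(observed)

n_replications = 5000
exceed = 0
for _ in range(n_replications):
    # Draw a parameter from the posterior, then simulate a data set from it.
    theta = rng.betavariate(alpha_post, beta_post)
    replicated = [1 if rng.random() < theta else 0 for _ in observed]
    if longest_run(replicated) >= observed_stat:
        exceed += 1

# Share of replications at least as streaky as the observed data.
posterior_predictive_p = exceed / n_replications
print(f"posterior predictive p-value: {posterior_predictive_p:.3f}")
```

A small value suggests that the model rarely reproduces the streakiness seen in the data, pointing to a weakness (here, the independence assumption) that a revised model could address.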

Advantages in Research Settings

Bayesian methods shine in research. They naturally handle parameter uncertainty. Prior distributions help constrain parameters to plausible values. They reduce ambiguity in decision-making.

Adaptability and Flexibility

Bayesian analysis adapts easily. It can handle complex statistical models. Flexible modeling options become available. Analysts can explore a broader hypothesis range.

- Flexibility elevates analytical power.

- Complex models become manageable.

- Hypothesis exploration broadens.

Transparency and Clarity

Communicating uncertainty is clearer. Bayesian methods openly discuss prior assumptions. Results interpretation focuses on probability. This clarity helps inform decision-making.

- Transparency builds trust in findings.

- Probabilities make results understandable.

- Decisions rest on clearer ground.

Conclusion: A Paradigm for Uncertain Times

Bayesian analysis brings a robust framework. It skillfully navigates the seas of uncertainty. By making use of priors and new data, it enhances inference. Research benefits from its nuanced approach to uncertainty. Bayesian methods will likely continue to spread in diverse research fields. They provide a solid bedrock for making informed decisions amidst uncertainty.

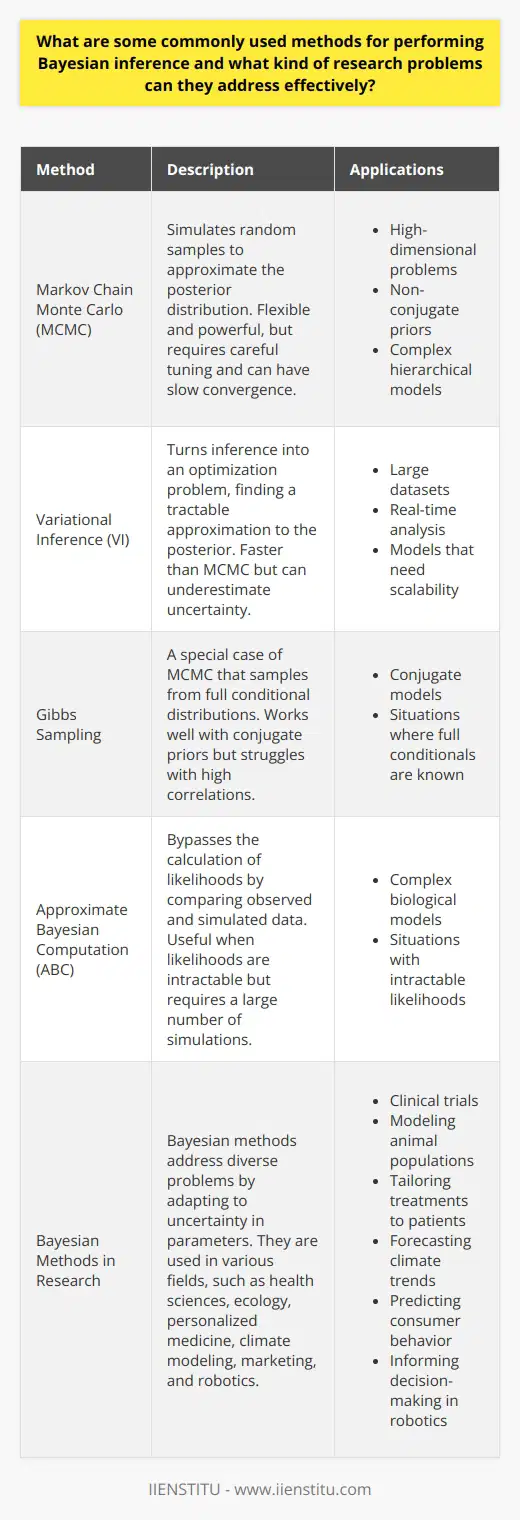

What are some commonly used methods for performing Bayesian inference and what kind of research problems can they address effectively?

Bayesian Inference

Bayesian inference stands as a statistical paradigm. It assigns probabilities to hypotheses. These probabilities reflect a degree of belief. Bayesian inference contrasts with frequentist methods. It updates the state of belief after obtaining new data.

Popular Methods

Markov Chain Monte Carlo (MCMC)

This method draws correlated random samples from a Markov chain. The chain is built so that its samples approximate the posterior distribution. MCMC is flexible and powerful. It handles complex models. Yet, it often requires careful tuning. Convergence can be slow.

Applications:

- High-dimensional problems

- Non-conjugate priors

- Complex hierarchical models
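To give a feel for how MCMC works, here is a minimal random-walk Metropolis sampler, one of the simplest members of the MCMC family. It is a sketch under stated assumptions: a binomial likelihood with a flat prior, hypothetical data (6 successes in 9 trials), and an untuned step size. Real analyses usually rely on established software rather than a hand-written sampler.

```python
import math
import random


def log_posterior(theta, successes, trials):
    """Unnormalized log posterior: binomial likelihood with a flat prior."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero posterior density outside (0, 1)
    return (successes * math.log(theta)
            + (trials - successes) * math.log(1.0 - theta))


def metropolis(successes, trials, n_samples=20000, step=0.2, seed=0):
    """Random-walk Metropolis sampler for the success probability."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_posterior(theta, successes, trials)
    samples = []
    for _ in range(n_samples):
        # Propose a nearby value with a symmetric Gaussian step.
        proposal = theta + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, successes, trials)
        # Accept with probability min(1, posterior ratio).
        accept_prob = math.exp(min(0.0, candidate - current))
        if rng.random() < accept_prob:
            theta, current = proposal, candidate
        samples.append(theta)
    return samples


burn_in = 2000  # discard early samples taken before the chain settles
samples = metropolis(successes=6, trials=9)[burn_in:]
mcmc_mean = sum(samples) / len(samples)

# For this toy model the exact posterior is Beta(7, 4), with mean 7/11.
print(f"MCMC posterior mean:  {mcmc_mean:.3f}")
print(f"exact posterior mean: {7 / 11:.3f}")
```

The toy model has a known answer, which makes it a convenient check; the appeal of MCMC is that the same recipe applies when no closed-form posterior exists.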

Variational Inference (VI)

VI turns inference into an optimization problem. It finds a tractable approximation to the posterior. VI is typically faster than MCMC. But it provides only an approximation. This can lead to underestimation of uncertainty.

Applications:

- Large datasets

- Real-time analysis

- Models that need scalability

Gibbs Sampling

Gibbs Sampling is a special MCMC case. It samples each parameter from its full conditional distribution. It works well with conjugate priors. No proposal tuning is needed. Yet, it can mix slowly when parameters are highly correlated.

Applications:

- Conjugate models

- Situations where full conditionals are known
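A small sketch of Gibbs sampling is shown below for a case where the full conditionals are known exactly: a standard bivariate normal with correlation rho, where each coordinate given the other is normal with mean rho times the other coordinate and variance 1 - rho^2. The target distribution and the value of rho are assumptions chosen for illustration.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples=20000, burn_in=1000, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    conditional_sd = math.sqrt(1.0 - rho ** 2)
    x, y = 0.0, 0.0
    samples = []
    for i in range(n_samples + burn_in):
        # Sample each coordinate from its full conditional in turn.
        x = rng.gauss(rho * y, conditional_sd)
        y = rng.gauss(rho * x, conditional_sd)
        if i >= burn_in:
            samples.append((x, y))
    return samples


def correlation(pairs):
    """Sample Pearson correlation of a list of (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    var_y = sum((y - mean_y) ** 2 for _, y in pairs)
    return cov / math.sqrt(var_x * var_y)


rho = 0.8  # hypothetical target correlation
pairs = gibbs_bivariate_normal(rho)
estimated_rho = correlation(pairs)
print(f"target correlation:    {rho}")
print(f"estimated correlation: {estimated_rho:.3f}")
```

As rho approaches 1, each conditional step moves only a short distance, so successive samples become strongly dependent; this is the slow mixing under high correlation noted above.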

Approximate Bayesian Computation (ABC)

ABC bypasses the calculation of likelihoods. It compares observed and simulated data. It is useful when likelihoods are intractable. However, it requires a large number of simulations.

Applications:

- Complex biological models

- Situations with intractable likelihoods
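The simplest ABC variant is rejection sampling: draw a parameter from the prior, simulate data, and keep the draw only if the simulated data are close enough to the observed data. The sketch below assumes a Uniform(0, 1) prior, a success count as the summary statistic, and hypothetical data (6 successes in 9 trials). The likelihood is tractable in this toy case, which is deliberate, since it lets the result be checked against the exact posterior.

```python
import random


def abc_rejection(observed_successes, trials, n_draws=50000,
                  tolerance=0, seed=0):
    """Rejection ABC for a success probability with a Uniform(0, 1) prior."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_draws):
        # 1. Draw a candidate parameter from the prior.
        theta = rng.random()
        # 2. Simulate a data set from the model; no likelihood is evaluated.
        simulated = sum(rng.random() < theta for _ in range(trials))
        # 3. Keep the candidate if the simulation matches the observation.
        if abs(simulated - observed_successes) <= tolerance:
            accepted.append(theta)
    return accepted


accepted = abc_rejection(observed_successes=6, trials=9)
abc_mean = sum(accepted) / len(accepted)
acceptance_rate = len(accepted) / 50000

# With tolerance 0 the accepted draws follow the exact posterior,
# Beta(7, 4), whose mean is 7/11.
print(f"accepted draws:       {len(accepted)}")
print(f"ABC posterior mean:   {abc_mean:.3f}")
print(f"exact posterior mean: {7 / 11:.3f}")
```

Only a fraction of the simulations is accepted even in this small example, which illustrates why ABC can demand a large number of simulations when the data are richer or the tolerance is tight.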

Addressing Research Problems

Bayesian methods address diverse problems. They adapt to uncertainty in parameters. Health sciences use these for clinical trials. Ecologists apply them to model animal populations.

Personalized Medicine

Doctors tailor treatments to patients. Bayesian statistics support this personalization. They adapt to newly collected patient data.

Climate Modelling

Bayesian models analyze climate data. They incorporate prior scientific knowledge. They forecast future climate trends.

Marketing

Companies predict consumer behavior. Bayesian methods account for prior market data. They refine these predictions over time.

Robotics

Robots learn from sensory data. Bayesian inference informs decision-making. It balances prior knowledge and new observations.